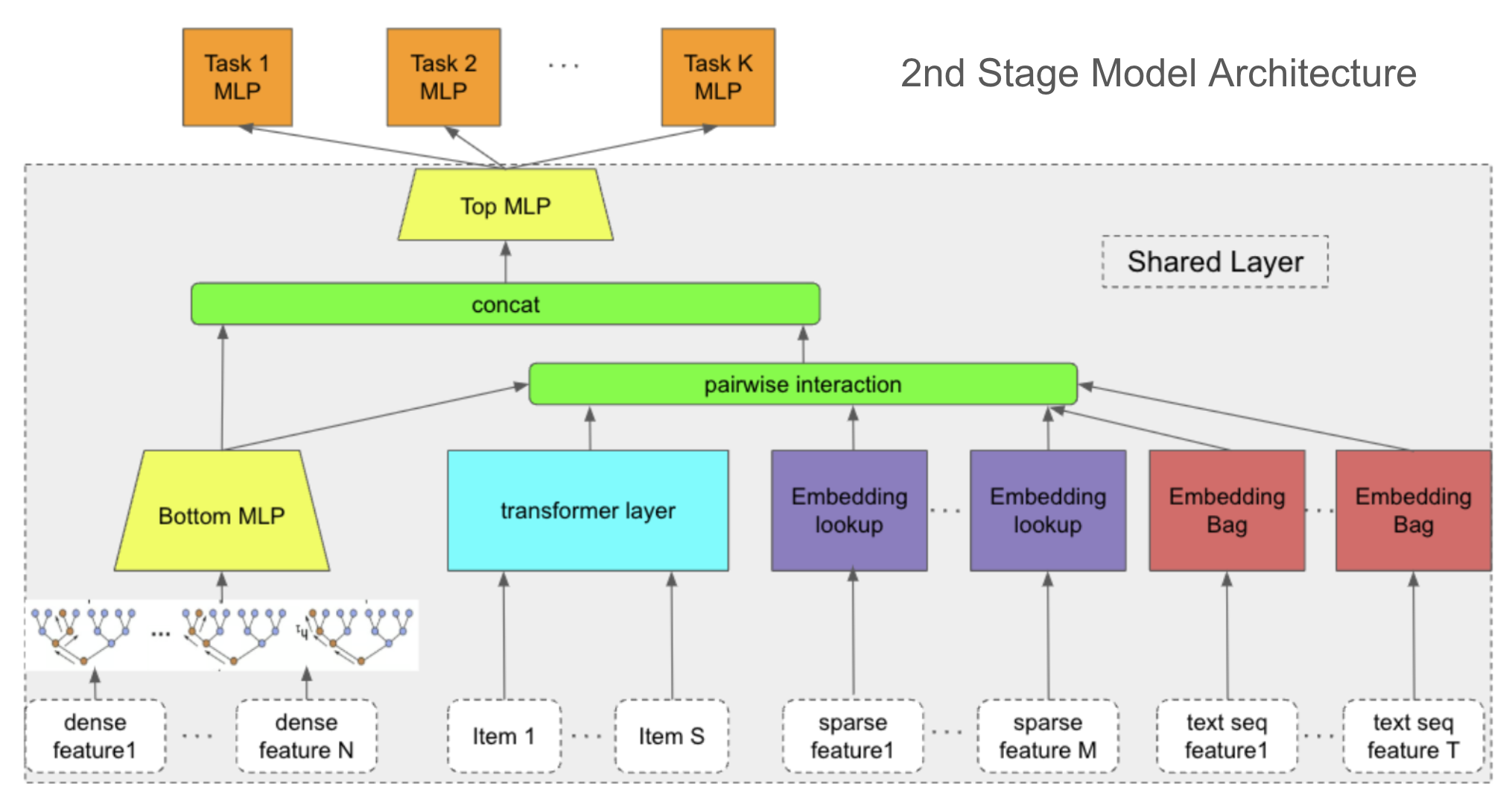

Second-Stage Ranking Model Architecture

A review of Promoted's systems for ML engineers

Promoted uses state-of-the-art deep neural networks for event prediction. The model has the following characteristics:

Modularized feature transformations per feature type

Our current model has a modular architecture for four feature types:

- Dense features
  - Float or integer numbers
  - Provided by the customer via Requests or the CMS
  - Produced by the real-time feature store for engagement counts, ratios, and times
  - All dense features are grouped into one component
  - We learn an XGBoost model as a feature transformation layer that maps the dense features to tree leaf nodes, then build an MLP component on top of it
  - Examples
    - User age
    - Time-to-Now in milliseconds
    - Count of clicks in the past 7 days for this user
    - Click-to-purchase rate in the past 30 days for this item
    - Count of follows for the seller of this item

- Sparse features
  - Categorical, selected by heuristics (e.g., coverage above a certain threshold) and by configuration
  - Examples
    - Item category
    - Item grade
    - Topic
    - Theme
    - Page/surface location
    - Timezone
    - Seller ID

- User<>Item engagement sequence features
  - These are multiple sequences of features that model real-time personalization
  - We use the user's N most recently engaged items, enriched with the engagement type and timestamp of each engagement, as input features, then apply a transformer layer on top of the sequence input

- Text features
  - We model text tokens as sparse IDs and learn a single embedding table for all text features
  - Each type of input is represented as an embedding bag. The output layer is the sum of all embeddings in the bag
  - Examples
    - Search query
    - Item title
    - Item description

- We apply all or a subset of the above transformations depending on our customers' data.
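
As an illustration of the text-feature transformation described above, the following is a minimal NumPy sketch of an embedding bag with sum pooling over one shared embedding table. It is not Promoted's implementation: the whitespace tokenizer, the CRC32 hashing scheme, `VOCAB_SIZE`, `EMBED_DIM`, and the function names are all assumptions made for the example, and the table is random rather than learned.

```python
import zlib

import numpy as np

VOCAB_SIZE = 1000  # hypothetical number of hash buckets for token IDs
EMBED_DIM = 8      # hypothetical embedding width

rng = np.random.default_rng(0)
# One embedding table shared by every text feature. In a real model this is
# learned; here it is random purely for illustration.
embedding_table = rng.normal(size=(VOCAB_SIZE, EMBED_DIM))


def token_ids(text):
    """Map whitespace tokens to sparse IDs with a stable hash (assumed scheme)."""
    return [
        zlib.crc32(token.encode("utf-8")) % VOCAB_SIZE
        for token in text.lower().split()
    ]


def embedding_bag_sum(text):
    """Return the sum of the embeddings of all tokens in one text feature."""
    ids = token_ids(text)
    if not ids:
        # An empty bag contributes a zero vector.
        return np.zeros(EMBED_DIM)
    return embedding_table[ids].sum(axis=0)


# Each text feature is its own bag, but all bags read from the same table.
query_vec = embedding_bag_sum("red running shoes")
title_vec = embedding_bag_sum("Lightweight Red Running Shoes")
```

Because pooling is a plain sum, the output has a fixed width regardless of how many tokens the query, title, or description contains, which is what lets it feed the downstream interaction layer.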

Feature component interaction

After transforming the input features, we build interaction layers on top of them. The outputs of all input components pass through a pairwise interaction layer that computes pairwise dot products. The outputs of this interaction layer are then concatenated with the output of the Bottom MLP (which sits on top of the dense features) into a flat layer. Finally, we apply another MLP, the Top MLP, on top of this flat concatenation layer.
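
The interaction step can be sketched in NumPy as follows. This is a simplified illustration under stated assumptions, not the production model: the component width `DIM`, the layer sizes, the random weights, the ReLU activations, and the helper names `pairwise_dot_interactions` and `mlp` are all hypothetical.

```python
import numpy as np


def pairwise_dot_interactions(components):
    """Dot products of every distinct pair of component vectors.

    components: array of shape (num_components, dim).
    Returns an array of shape (num_components * (num_components - 1) / 2,).
    """
    gram = components @ components.T
    rows, cols = np.triu_indices(components.shape[0], k=1)
    return gram[rows, cols]


def mlp(x, layers):
    """Apply linear layers with ReLU between them (last layer left linear)."""
    for index, (weights, bias) in enumerate(layers):
        x = x @ weights + bias
        if index < len(layers) - 1:
            x = np.maximum(x, 0.0)
    return x


rng = np.random.default_rng(0)
DIM = 4  # hypothetical shared output width of every input component

# Stand-ins for the outputs of the four input components.
dense_out = rng.normal(size=DIM)   # Bottom MLP output over dense features
sparse_out = rng.normal(size=DIM)
sequence_out = rng.normal(size=DIM)
text_out = rng.normal(size=DIM)

components = np.stack([dense_out, sparse_out, sequence_out, text_out])
interactions = pairwise_dot_interactions(components)  # 4 choose 2 = 6 values

# Flat layer: Bottom MLP output concatenated with the pairwise interactions.
flat = np.concatenate([dense_out, interactions])

top_layers = [
    (rng.normal(size=(flat.shape[0], 16)), np.zeros(16)),
    (rng.normal(size=(16, 8)), np.zeros(8)),
]
top_out = mlp(flat, top_layers)
```

Taking only the upper triangle of the Gram matrix avoids duplicate pairs and self-interactions, so four components yield six interaction values.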

Multi-task Learning for Clicks and Conversions

In search, feed, and ads recommendation, we need to predict the probabilities of multiple types of events, e.g., clicks, likes, saves, and purchases. We learn all events in a single model by building an MLP task layer for each task on top of the Top MLP layer. Each task layer is trained only with the data for that specific task, while the shared layers are trained with the data from all tasks. The multi-task architecture is particularly beneficial for tasks with very little data, like Purchase. It also reduces the total cost of ownership of training, managing, and creating training data. This contrasts with the common approach of one model per task, where each model has its own “supporting cast”, including data pipelines, training jobs, predictors, and computational resources.
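
One common way to train each task layer only on its own data is to mask the per-task loss, sketched below in NumPy. This is an assumption about how such training can be set up, not a description of Promoted's training code: the single linear layer per task head (instead of an MLP), the binary cross-entropy loss, the mask convention, and the name `masked_multitask_bce` are all hypothetical.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def masked_multitask_bce(shared, heads, labels, masks):
    """Per-task binary cross-entropy over a shared representation.

    shared: (batch, dim) output of the shared layers.
    heads:  task -> (weights of shape (dim,), bias); one linear head per task.
    labels: task -> (batch,) array of 0/1 labels.
    masks:  task -> (batch,) array, 1 where the example has data for the task.
    """
    losses = {}
    for task, (weights, bias) in heads.items():
        probs = np.clip(sigmoid(shared @ weights + bias), 1e-7, 1.0 - 1e-7)
        y, mask = labels[task], masks[task]
        bce = -(y * np.log(probs) + (1.0 - y) * np.log(1.0 - probs))
        # Masked examples contribute nothing to this task's loss.
        losses[task] = float((bce * mask).sum() / max(mask.sum(), 1.0))
    return losses


rng = np.random.default_rng(0)
shared = rng.normal(size=(4, 3))  # stand-in for the Top MLP output
heads = {
    "click": (rng.normal(size=3), 0.0),
    "purchase": (rng.normal(size=3), 0.0),
}
labels = {
    "click": np.array([1.0, 0.0, 1.0, 0.0]),
    "purchase": np.array([0.0, 0.0, 1.0, 0.0]),
}
masks = {
    "click": np.ones(4),                         # every example has click data
    "purchase": np.array([0.0, 0.0, 1.0, 1.0]),  # only two have purchase data
}

losses = masked_multitask_bce(shared, heads, labels, masks)
total_loss = sum(losses.values())
```

When the summed loss is minimized with gradient descent, a masked example produces no gradient for that task's head, while the shared layers still receive gradients from every task that has data for the example.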

This document is accurate as of Q3 2023.
